NVIDIA’S RISE:

NVIDIA needs no introduction. The company behind the popular GeForce series went through many hurdles, ups and downs to get where it stands now. NVIDIA did not have millions of dollars when it started its business. Just like ATI, it earned what it has now.

Ever since the launch of the RIVA 128, NVIDIA has been a bullet train with no one to stop it. Every marketing step it took was well calculated and deeply thought out.

NVIDIA’s success:

NVIDIA, the prime reason for the fall of 3DFX, now owns all of 3DFX’s intellectual property. 3DFX, maker of the Voodoo Graphics cards, was the largest company in the field, and everyone, especially gamers, liked its products. Today NVIDIA makes mobile Go processors for Toshiba and other notebooks, while many other OEMs choose NVIDIA over companies like S3 and ATI for their high-performance desktop systems.

This article answers several questions: How did NVIDIA become so big? Were there any hurdles? How did NVIDIA deal with them? Are there any facts that most people don’t know?

Beginning of NVIDIA:

Unlike ATI, NVIDIA was started by three industry veterans in 1993. Jen-Hsun Huang, NVIDIA President and CEO, had been the Director of Coreware at LSI Logic. Curtis Priem, NVIDIA Chief Technical Officer, had been the architect of the first graphics processor for the PC, the IBM Professional Graphics Adapter, and of the GX graphics chips at Sun Microsystems. Chris Malachowsky, VP of Hardware Engineering, was a Senior Staff Engineer at Sun Microsystems, Inc., and co-inventor of the GX graphics architecture.

Jen-Hsun Huang

At that time, CD-ROMs and 16-bit sound cards were growing in popularity, and graphics accelerators supported full-motion video and photo-realistic, high-color (16-bit) display modes. In this environment NVIDIA developed its first chip with the help of SGS-Thomson Microelectronics (ST Micro).

January 1995: NV1 and STG 2000:

In 1995, NVIDIA launched two chips, the NV1 and the STG 2000. The NV1 used VRAM while the STG 2000 used DRAM. The NV1, NVIDIA’s first product, was way ahead of its time. With a complete 2D/3D graphics core, a 350-MIPS audio playback engine and an I/O processor, the chip’s entrance shocked everyone in the graphics market. Cards like the Diamond Edge 3D featured the NV1.

NVIDIA’s first product wasn’t only about graphics: it also integrated a playback-only sound card, a kind of product that was quite popular at that time. With 32 concurrent audio channels of 16-bit CD quality and simple 3D sound, the NV1 was more than just a PCI sound card. Its 6 MB patch for WAV files was even certified by Fat Labs.

The NV1 also featured direct support for the Sega Saturn game pad rather than the traditional 15-pin port, and support was added for Sega games like Virtua Fighter and Virtua Cop.

Complications:

When the NV1 was released, many of the graphics standards we now know had not been decided. Since polygons had not yet been settled on as the standard for hardware calculation, NVIDIA chose to implement quadratic texture maps instead. Where today’s cards build a structure out of many very small polygons, the NV1 worked with curved surfaces, which needed fewer calculations and gave it a performance boost over its rivals.

Microsoft nearly destroys NVIDIA:

Not long after the NV1’s arrival, Microsoft finalized Direct3D for the next generation of 3D graphics, and guess what? It used polygons as the standard, not quadratic texture maps. Despite NVIDIA’s and Diamond’s best efforts, they couldn’t persuade developers to write games and software that were not Direct3D compliant. Diamond even started bundling a Sega joypad with the NV1, but to no avail.

With Direct3D, Microsoft nearly destroyed NVIDIA. OEM builders refused to produce boards with chips that were not Direct3D compliant. NVIDIA knew it could not build a totally new 3D accelerator to support Direct3D in time, so it took a defensive step and laid off part of its workforce.

NV Console Chip:

NVIDIA’s financial savior arrived when Sega decided to help. Sega’s developers were very familiar with quadratic textures, and Japanese developers didn’t care about standards as long as the new technology brought performance. Sega funded the research for the NV2, so it is fair to say NVIDIA wouldn’t exist if it wasn’t for Sega. Sega later decided to drop the NV2, and little is known about the chip or the timing of events. We do know that the chip existed and that it was a collaboration with Sega, which came about because the NV1 had boosted the sales of Saturn accessories and Sega products. For the Dreamcast, Sega first went to 3DFX for a decent chip, and the Dreamcast’s graphics technology later went to PowerVR.

The Plan:

NVIDIA learned its lesson: ignoring the standards had been a big mistake. Though quadratic texture mapping was ingenious and better than the use of simple polygons, NVIDIA alone couldn’t make a big difference. So NVIDIA decided that it would be the first company not to have a proprietary native-mode API. 3DFX had Glide, PowerVR had PowerSGL and ATI had 3DCIF. NVIDIA proclaimed that Direct3D was its native mode. This helped NVIDIA in lots of ways. Software developers could now write code that would work on more chips rather than on the single architecture of a single brand, while NVIDIA had the big marketing power of Microsoft behind its product.

NVIDIA also decided that it would not make any more single-chip multimedia accelerators; instead it concentrated on 2D/3D graphics. It also adopted a six-month product cycle as company policy, a safety net to keep the company from making any company-ending decisions.

1997: RIVA 128

Comeback:

NVIDIA’s development of the NV3, or RIVA 128, was more or less complete; at this stage NVIDIA brought back some of the employees it had laid off due to financial issues.

When the RIVA 128 was announced, everyone thought it was just a hoax, because no one had heard from the company since the NV1 two years earlier and no one knew of the NV2 (since it was developed secretly). NVIDIA claimed that it had achieved a fill rate of 100 Mpixels/sec with a 128-bit bus, performance better than the Voodoo Graphics card.

If that wasn’t enough, NVIDIA also said that its card would be the first ever to support the AGP architecture (though not fully) and to incorporate a full hardware triangle setup engine.

The RIVA 128 did come out on schedule as expected. It lacked the image quality of the Voodoo, but thanks to its full support for 2D/3D graphics and its low cost, OEMs welcomed it with open arms. OEMs like Dell, Gateway and Micron used it on their boards, while the retail version was sold by companies like Diamond, ASUS and ELSA.

Product Cycle:

As mentioned earlier, NVIDIA adopted a six-month product cycle, which allowed it to release products as early as possible while staying as up to date as possible. Six months after the release of the RIVA 128, NVIDIA launched the RIVA 128ZX. This card had a larger frame buffer of 8 MB compared to the RIVA 128, and due to the growing popularity of OpenGL, NVIDIA added OpenGL support to the chip. It also marked the start of NVIDIA’s collaboration with the Taiwanese foundry TSMC.

NVIDIA’s six-month product cycle proved successful, while 3DFX’s single-chip solution, the Voodoo Banshee, suffered many delays. It was released in September 1998.

1998: Riva TNT

One month after the announcement of the RIVA 128ZX, NVIDIA announced its next generation of graphics adapters, the RIVA TNT. The TwiN Texel engine was a dual-pixel, 32-bit color pipeline that allowed the TNT to apply two textures to a single pixel or to process two pixels per clock cycle, bringing the fill rate up to 250 Mpixels/sec. It was also a roar from NVIDIA warning its rivals: step up or be destroyed.

The RIVA TNT didn’t just have that much power; it was the most impressive chip announced. With a noticeable improvement in image quality, the TNT brought true color support, a 24-bit Z-buffer with 8-bit stencil support, anisotropic filtering and per-pixel MIP mapping. The video memory was extended to 16 MB, while the transistor count was the same as that of the Intel Pentium II.

The company announced the RIVA TNT in late March, but the clock speed was later reduced to 90 MHz, which resulted in a fill rate of 180 Mpixels/sec.

When the RIVA TNT was launched, it proved to the world that 3DFX wasn’t the only company with hardcore 3D solutions. The TNT matched the Voodoo2 in terms of performance, but the Voodoo2 had the advantage of an earlier release, because the TNT had been announced long before its actual launch. Nonetheless, NVIDIA’s TNT had improved image quality (that is, 32-bit color), and most of all NVIDIA scored major OEMs and even became the official 3D graphics of the Professional Gamers’ League.

Product Cycle: March 1999

TNT2:

In March 1999, at the Game Developers Conference, NVIDIA launched two products: the TNT2 and the budget Vanta. The TNT2 was strictly a 0.25 micron variant of the TNT classic with a higher clock speed.

It wasn’t only the clock speed that was higher; the transistor count was also higher, ranging from 8 million to 10.5 million. The TNT2 also featured a frame buffer of 32 MB and digital flat panel support. NVIDIA promised a fill rate of 300 Mpixels/sec for the TNT2 Ultra variant. The TNT2 was rushed to market within two months of its announcement so that it could go head-on against the Voodoo3.

3DFX was losing support because developers were losing faith in Glide and switching to Direct3D and OpenGL. The Voodoo3 had everything, and it may have defeated the TNT2 in terms of frame rate, but many chose the TNT2 because its 32-bit color support meant awesome image quality.

Product Cycle: 1999

Geforce 256:

In August 1999, at the Intel Developer Forum, NVIDIA announced a product that was destined to change the course of history. Codenamed NV10, the GeForce 256 brought revolutionary features to the PC industry.

The GeForce 256 was the first graphics card to offer onboard hardware transformation and lighting, which took more load off the CPU. It incorporated a new chip with 4 pixel pipelines running at 120 MHz, for a theoretical maximum fill rate of 480 Mpixels/sec. The chip also supported both DDR and SDRAM. With DDR offering double the data bandwidth, the GeForce 256 was certainly a leader. And with improved image quality and features like cube environment mapping and Dot-3 bump mapping, the GeForce 256 made the point that NVIDIA had prevailed.
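The theoretical fill rate quoted here is simply the number of pixel pipelines multiplied by the core clock. A minimal Python sketch of that arithmetic follows; the function name `pixel_fill_rate_mpixels` is made up for illustration, the one-pixel-per-pipeline-per-clock model is an assumption, and the input figures are the ones quoted in this article.

```python
def pixel_fill_rate_mpixels(pixel_pipelines, core_clock_mhz):
    """Theoretical peak pixel fill rate in Mpixels/sec.

    Assumes every pipeline outputs one pixel per clock cycle, which is
    an upper bound that real workloads rarely reach.
    """
    return pixel_pipelines * core_clock_mhz


# GeForce 256: 4 pixel pipelines at 120 MHz
geforce_256 = pixel_fill_rate_mpixels(4, 120)

# RIVA TNT as shipped: 2 pixels per clock at 90 MHz
riva_tnt = pixel_fill_rate_mpixels(2, 90)

print(f"GeForce 256: {geforce_256} Mpixels/sec")
print(f"RIVA TNT:    {riva_tnt} Mpixels/sec")
```

The same formula explains why a clock reduction hurts so directly: with the pipeline count fixed, the peak fill rate scales linearly with the core clock.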

Furthermore, the GeForce 256 was the first card ever to support HDTV-compliant motion compensation and hardware alpha blending. In November 1999 NVIDIA released the Quadro, high-end hardware for professional needs, based upon the revolutionary GeForce 256.

Product Cycle: 2000

When GDC came around in March 2000, no one was expecting NVIDIA to announce a product. The S3 Savage and the ATI Rage Fury MAXX were the only products that came close to the GeForce 256 series, and the Voodoo5 was nowhere to be seen. Then NVIDIA announced that the Xbox would feature an NVIDIA-made GPU. Everyone was shocked. NVIDIA had returned to its roots, the console market. With a $200 million advance payment and a marketing opportunity, it was a win-win situation for NVIDIA as long as it could live up to the expectations. And now we can see the fruits of NVIDIA’s labor: the Xbox, an ingenious product without which most of us can’t think of living.

One month after the Xbox announcement, NVIDIA released:

Geforce2 GTS:

The GeForce2 was something special; anyone reading this article who ever owned a GeForce2 GTS knows that. Technically, the GeForce2 GTS almost doubled the pixel fill rate and quadrupled the texel fill rate compared to the GeForce 256, by increasing the clock speed and adding multi-texturing to each pixel pipeline. The GeForce2 GTS also added S3TC, FSAA and improved MPEG-2 motion compensation.

ATI, a Canadian company, was meanwhile busy working on its own new generation of products, the Radeon family. The Radeon was supposed to equal the GeForce2 GTS in performance while having similar features plus some additional ones, like environment-mapped bump mapping, DirectX 8 features and a transformation, clipping and lighting unit.

2000: Geforce2 MX:

Shortly after the GeForce2 GTS reached the shelves, NVIDIA launched a mainstream product called the GeForce2 MX. Cutting the pixel pipelines from four to two made this product cost effective. Two new features were also added. First, the GeForce2 MX incorporated NVIDIA’s TwinView feature, which lets you drive two displays from one card, just like Matrox’s DualHead. Second, certain silicon revisions were made so that the GeForce2 MX could work on the Mac. In January 2001, the GeForce2 MX was selected as the default high-end graphics solution for the Apple Power Macintosh G4. At this time the Radeon had just hit the market, plagued by driver issues.

July 2000: Quadro2 Pro and Quadro2 MXR:

In July 2000, NVIDIA released two high-end cards made for professionals: the Quadro2 Pro, based on the GeForce2 GTS, and the Quadro2 MXR, based on the GeForce2 MX.

August 2000: GeForce2 Ultra:

NVIDIA knew that its GeForce2 GTS and GeForce2 MX could hold their ground against future threats like the ATI Radeon and the Voodoo 4/5. Since its upcoming chip, the NV20, had a few complications and wasn’t ready, it released a higher-clocked version of the GeForce2 GTS, the GeForce2 Ultra. NVIDIA didn’t want to repeat the mistake it had made at the start, so it waited for the release of the final DirectX 8.

November 2000: GeForce2 Go:

At the end of the year, NVIDIA launched its first ever mobile graphics chip, the GeForce2 Go. It was also the first mobile graphics chip with hardware transformation, clipping and lighting. The GeForce2 Go had a 720p HDTV motion compensation engine and, in order to save power as well as cost, a reduced clock speed, resulting in a pixel fill rate of 286 Mpixels/sec and a texel fill rate of 572 Mtexels/sec. Its rival had one pixel pipeline and three texture read units, resulting in lower 3D detail but also lower power consumption and cost.

December 2000: Fall of Titan:

After four years of battling NVIDIA, 3DFX fell, and fell like an injured elephant. With the Voodoo5 delayed, 3DFX couldn’t bear it any longer; following the instructions of its board of directors, it started dissolving itself. All of its intellectual property and other assets were sold to NVIDIA, including 3dfx’s research on anti-aliasing, Gigapixel’s tile-based rendering technologies, and technology still being developed. In addition, approximately 100 former 3dfx employees joined NVIDIA, bringing even more talent to the company.

YEAR 2000: Year of Graphics Card:

The year 2000 saw NVIDIA launch 6 products based on 2 graphics chips, while the company also separated its workstation cards from its mainstream gamer cards.

The real surprise came from ATI. The release of the Radeon propelled the company to the next level of competition: the battle between ATI and NVIDIA for the crown of best graphics card maker.

Year 2001: Geforce 3, Codename: NV20

In 2001, NVIDIA released another long-awaited card, the GeForce 3. The GeForce 3 was a sure winner: it was the fastest card around, and gamers and everyone else liked it. One variant that was released was the Ti 500. Clocked at 240 MHz core/500 MHz memory, the GeForce 3 put NVIDIA at heights it had never before imagined. It also paved the way for future cards, since the GeForce 3 chip had potential and NVIDIA intended to use it. The GeForce 3 opened the door to the overclocking world as we know it today.

The GeForce 3 originally offered a total of 57 million transistors, performance of 46 to 76 gigaflops, a 0.15 micron process, vertex shader technology, pixel shader technology, Lightspeed Memory Architecture and multi-sampling full-scene anti-aliasing. The video RAM was as high as 128 MB in these cards.

Year 2002: Geforce 4, Codename NV25(Ti) & NV17(MX)

The year 2002 marked the launch of the GeForce 4. The product launched in two flavors, the Ti series and the MX series. The Ti series, the dominant one overall, came in 4200, 4400, 4600 and 4800 flavors. The video RAM was as high as 128 MB in these cards.

TI Featured:

- 63 million transistors (only 6 million more than GeForce3)

- Manufactured in TSMC’s .15 µ process

- Chip clock 225 – 300 MHz

- Memory clock 500 – 650 MHz

- Memory bandwidth 8,000 – 10,400 MB/s

- TnL Performance of 75 – 100 million vertices/s

- 128 MB frame buffer by default

- nfiniteFX II engine

- Accuview Anti Aliasing

- Light Speed Memory Architecture II

- nView
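The memory bandwidth range in the list above follows from the memory clock range if one assumes a 128-bit memory bus (16 bytes per transfer) and treats the listed memory clocks as effective data rates. The bus width is not stated in the list, so it is an assumption here, and the helper name `memory_bandwidth_mb_s` is purely illustrative. A minimal Python sketch:

```python
def memory_bandwidth_mb_s(effective_clock_mhz, bus_width_bits=128):
    """Peak memory bandwidth in MB/s.

    effective_clock_mhz: memory data rate in millions of transfers/sec
    bus_width_bits:      width of the memory bus (assumed 128-bit here)
    """
    bytes_per_transfer = bus_width_bits // 8
    return effective_clock_mhz * bytes_per_transfer


# Lower and upper ends of the memory clock range listed for the Ti series
low_end = memory_bandwidth_mb_s(500)
high_end = memory_bandwidth_mb_s(650)

print(f"{low_end} MB/s to {high_end} MB/s")
```

Under these assumptions the two ends of the clock range reproduce the two ends of the bandwidth range in the list.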

The MX came in 400, 420, 440 & 460 variants. In short, the GeForce 3 Ti cards are far more advanced than the MX ones. The GeForce 4 name fooled many buyers into thinking that, since it is a GeForce 4 card, it must have some power, but in fact it doesn’t even properly support DirectX 8.1.

MX Featured:

- Based on GeForce2 MX GPU

- Manufactured in TSMC’s .15 µ process

- Chip clock 250 – 300 MHz

- Memory clock 166 – 550 MHz

- Memory bandwidth 2,600 – 8,800 MB/s

- Accuview Anti Aliasing

- Light Speed Memory Architecture II, stripped down version

Year 2002: Geforce FX: Codename: NV 30

It was late 2002, around November, when NVIDIA launched its FX series of cards. At first the 5800 and 5800 Ultra were available; then came variants like the 5200, 5200 Ultra, 5500, 5600, 5600 Ultra, 5600 XT, 5700, 5700 Ultra, 5700 LE, 5900, 5900 Ultra, 5900 XT, 5950 Ultra and 5950 Ultra Extreme editions, released either by NVIDIA or by launch partners like ASUS and EVGA.

Features:

- 0.13 micron manufacturing process

- 125 million Transistors

- AGP 8X

- 8 Pixels Per Clock

- 1 TMU/Pipe (16 Textures/Unit)

- Full DX9 Capability

- 2 x 400MHz RAMDAC’s (both internal)

- 128-Bit Memory Interface

- 256-Bit GPU

- 128MB/256MB Memory Size Support

NVIDIA’s FX series was meant to supply a broad range of cards to fit anyone’s budget. The 5200, for example, was priced under $100 within a couple of weeks. These cards were positioned against ATI’s 9700s, but later models like the 5950 UE went head-on against ATI’s 9800 XT. Some cards in the series are PCI-E compatible, like the 5900 Ultra, PCX 5750, 5300 etc.

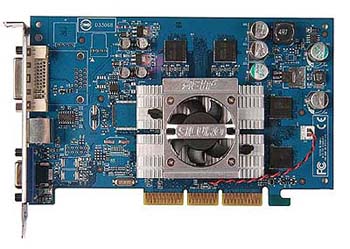

Geforce 6x series: Codename NV40:

NVIDIA’s fastest cards to date. My favorite card falls in this series too: the EVGA GeForce 6800 Ultra Extreme Edition. The variants in the 6x series are the 6200, 6600 GT, 6600 Ultra, 6800 GT, 6800 Ultra and 6800 Ultra Extreme Edition. These cards are supposed to be for the PCI-E architecture, but since PCI-E mobos are getting cheaper, the PCI-E versions of these cards (the good ones) are becoming extinct. The favorite card in the whole 6x series is the 6800 GT. With a proper cooling solution, this card can be overclocked to the 6800 Ultra’s clock speed. The 6200 is actually a TurboCache card: in these cards, your system memory is shared to give you maximum performance.

6800 Ultra Extreme Edition features:

- Core Clock Speed 450 MHz

- Memory Clock Speed 600 MHz

- Memory Bandwidth 35.2 GB/sec

- Vertices per Second 600 Million

- Memory Data Rate 1100 MHz

- Pixels per Clock (peak) 16

- Textures per Pixel 16

- RAMDACs 400 MHz

- Superscalar 16-pipe architecture

- CineFX 3.0 Engine

- Dual DVI Connectors

- 64-bit texture filtering and blending

- 128-bit studio precision computation
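The memory figures in the list above can be cross-checked against each other: dividing the bandwidth by the data rate gives the number of bytes moved per transfer, and hence the implied width of the memory bus. The Python sketch below does that check; the helper name `implied_bus_width_bits` is hypothetical, and 1 GB is taken as 1000 MB.

```python
def implied_bus_width_bits(bandwidth_mb_s, data_rate_mhz):
    """Memory bus width (in bits) implied by a bandwidth and a data rate.

    bandwidth_mb_s: peak memory bandwidth in MB/s
    data_rate_mhz:  memory data rate in millions of transfers/sec
    """
    bytes_per_transfer = bandwidth_mb_s / data_rate_mhz
    return bytes_per_transfer * 8


# 35.2 GB/sec expressed as 35,200 MB/s, at a 1100 MHz data rate
bus_width = implied_bus_width_bits(35_200, 1100)

print(f"Implied memory bus width: {bus_width:.0f} bits")
```

A result that lands on a power of two suggests the bandwidth and data rate entries in the list are at least consistent with each other.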

Upcoming and Recent Products:

SLI:

Ah yes, NVIDIA’s SLI. SLI means Scalable Link Interface, and it is NVIDIA’s lottery ticket. This is a unique technology developed by NVIDIA with which we can put two graphics cards together in the same system to increase performance. This technology is the future of ATI. It uses the PCI-E architecture to join two identical NVIDIA PCI-E cards with a connector so that they work together on calculations, which results in increased performance. An SLI system has posted the highest score in 3DMark05, at 10,000+.

PS3:

Sony and NVIDIA have struck a deal under which NVIDIA will make the GPU for Sony’s next-generation console, the PS3. The PS3 is powered by Cell; Cell processors put in a rack can easily make the list of the top 500 supercomputers, with 16 teraflops. The PS3 is scheduled to arrive in mid/late 2006.

Conclusion:

Although ATI is older and truly one of the first graphics adapter manufacturers, NVIDIA knows that, having defeated a giant like 3DFX, it can defeat anyone. One thing is noticeable in NVIDIA’s history: all the products it ever launched, except the NV1, had six-month intervals between them. Fall and spring are NVIDIA’s launching seasons; the 6200, for example, is being launched this coming spring. NVIDIA, being younger than ATI, worked very hard to get where it stands now. NVIDIA’s decisions and strategies worked like a charm, and that’s why the top-level gaming market belongs to NVIDIA.